How much power does AI need?

To usher in a true era of AI, we first need to resolve the problem of its immense power consumption. Otherwise, as some point out, the wealth gap among users will turn into a gap in AI usage. That is why AI leaders such as Google and IBM are investing heavily in energy research as well as in AI itself.

AlphaGo, which faced Lee Se-dol in March 2016, was capable of running 30 trillion computations per second on whole numbers (1, 2, 3...). Still, the computations that beat a human champion required an enormous amount of power and large-capacity servers. AlphaGo used 1,202 CPUs (central processing units) and 176 GPUs (graphics processing units). By a simple calculation, running such a system requires approximately 170 kW of power (1,202 x 100 W + 176 x 300 W). Compared with the 20 W that a human brain needs, this is staggeringly inefficient. In fact, the benefits of AI may be overshadowed by its energy cost.
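The simple calculation above can be reproduced in a few lines of Python. This is only a sketch of the article's own arithmetic: the 100 W per CPU and 300 W per GPU figures are the rough values used in the text, not measurements, and the constant and function names are made up for illustration.

```python
# Back-of-the-envelope power estimate for AlphaGo's hardware.
# Per-chip wattages are the rough figures quoted in the text, not measured values.
CPU_COUNT = 1202
GPU_COUNT = 176
WATTS_PER_CPU = 100
WATTS_PER_GPU = 300
HUMAN_BRAIN_WATTS = 20


def alphago_power_watts() -> int:
    """Total draw in watts, assuming every chip runs at its nominal wattage."""
    return CPU_COUNT * WATTS_PER_CPU + GPU_COUNT * WATTS_PER_GPU


total_w = alphago_power_watts()
ratio_to_brain = total_w / HUMAN_BRAIN_WATTS

print(f"Estimated power: {total_w / 1000:.0f} kW")                  # Estimated power: 173 kW
print(f"Ratio to a 20 W human brain: about {ratio_to_brain:,.0f}x")  # about 8,650x
```

The exact sum is 173 kW, which the text rounds to approximately 170 kW. That is several thousand times the power a human brain needs.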

In the past, what mattered was fast computation; these days, experts say, it is computing with less power. AI in every home would put an enormous burden on power plants, not to mention on household electricity bills, and the wealth gap would thus result in a gap in information usage.

Then what are Google and IBM looking into in their energy research? IBM’s Watson project also requires a great deal of power if it is to become universally available. IBM has therefore been working on a lithium-air battery development project dubbed “Battery 500” since 2009. It is difficult even to start up an AI system in the current mobile environment, which relies mostly on lithium-ion batteries, so we need a technology such as the lithium-air battery, which drastically boosts energy efficiency through the chemical reaction of lithium and oxygen.

Meanwhile, Google has started developing a power inverter. An inverter is a device that converts direct current, such as the output of solar panels, into the alternating current we use every day. Running data centers, the birthplace of AI, requires a huge amount of power, which Google intends to replace gradually with sustainable energy sources such as solar power.


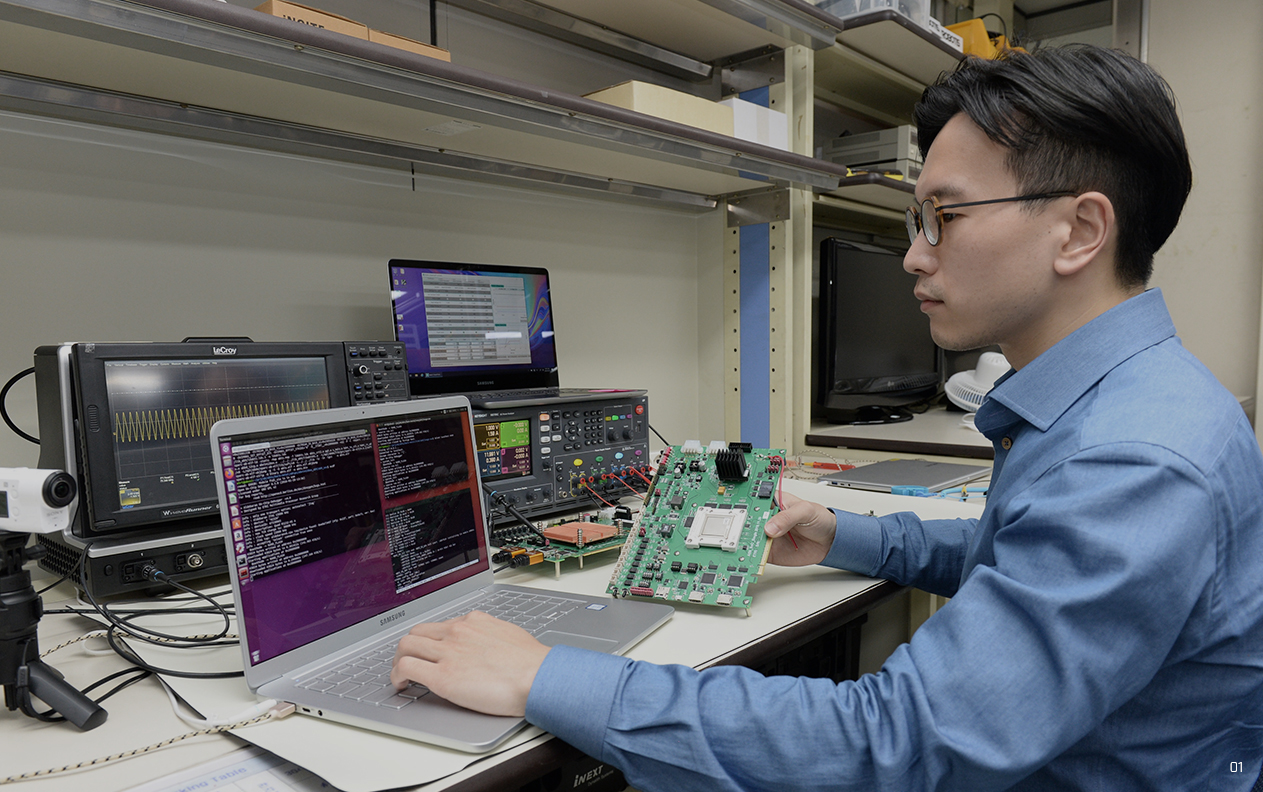

01 ETRI developed the NPU optimized for deep learning-based calculations.

Two birds with one stone: Development of an AI semiconductor

ETRI developed an NPU (Neural Processing Unit) in late February, drawing on more than a decade’s worth of know-how. It is the “AB9” chip, the new version of ETRI’s proprietary high-performance processor Aldebaran. It delivers greater computing power than commercial products while consuming far less power. It is expected to be used in mobile platforms, autonomous vehicles, intelligent robots, and drones, and to mark a milestone in the history of Korean non-memory semiconductors. Like Google’s AlphaGo, the NPU essentially reproduces and accelerates human learning and inference processes.

The chip developed by ETRI is capable of 40 TFLOPS of computation while consuming only 15 W of power. Its performance per watt is up to 25 times better than that of existing commercial products, and its power consumption is as little as one-twentieth of theirs.
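The figures in this paragraph can be turned into a performance-per-watt number with a short Python sketch. The "implied" values below simply take the article's "up to" ratios at face value; they are assumptions for illustration, not measurements of any specific commercial product, and all names are hypothetical.

```python
# Performance per watt from the figures quoted in the text.
CHIP_TFLOPS = 40            # stated computation rate
CHIP_WATTS = 15             # stated power consumption
STATED_EFFICIENCY_GAIN = 25  # "up to 25 times better" per unit of power
STATED_POWER_FACTOR = 20     # power consumption "20 times smaller"


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Computation rate delivered per watt of power drawn."""
    return tflops / watts


chip_eff = tflops_per_watt(CHIP_TFLOPS, CHIP_WATTS)

# Assumption: read the stated ratios literally to see what baseline they imply.
implied_baseline_eff = chip_eff / STATED_EFFICIENCY_GAIN
implied_baseline_watts = CHIP_WATTS * STATED_POWER_FACTOR

print(f"ETRI chip: {chip_eff:.2f} TFLOPS/W")
print(f"Implied baseline: {implied_baseline_eff:.3f} TFLOPS/W at {implied_baseline_watts} W")
```

Read this way, the comparison product would draw about 300 W, the same rough per-GPU wattage the article uses in its AlphaGo estimate.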

Its price competitiveness is also remarkable. A single commercial GPU costs USD 8,000 to 10,000, whereas the ETRI chip is expected to be available for only a few hundred US dollars, making it up to 50 times cheaper.

Aldebaran

The new version of the CPU that ETRI has been working on

NPU

Neural Processing Unit, capable of running similarly to the neural networks of a human brain

Intelligent Semiconductor Research Center working on a chip capable of simultaneous learning and inference.

Chips capable of simultaneous learning and inference

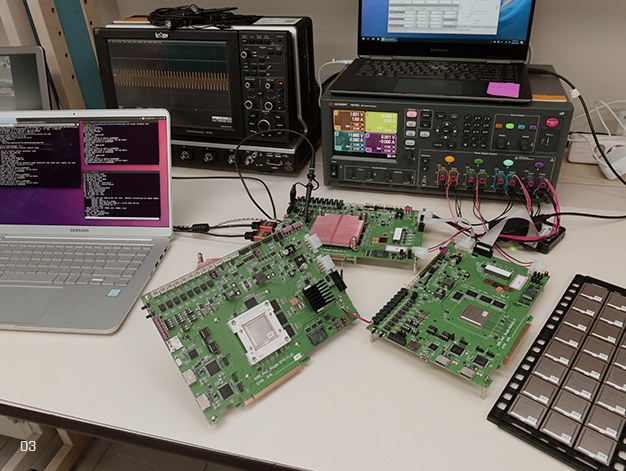

The team used a 28 nm process to make the chip as small as possible. Remarkably, a chip measuring only 17 mm x 23 mm is capable of 40 TFLOPS of computation. Because it is optimized for deep learning computations, it can be used in diverse applications and commercialized easily. Advances in computing performance for processing large amounts of data will be essential in the era of the 4th industrial revolution.
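To put the size figure in perspective, the following Python sketch works out the chip's footprint and the computation rate per unit of area. It assumes the quoted 17 mm x 23 mm describes a simple rectangle; the text does not say whether this is the bare die or the packaged chip, and the names are hypothetical.

```python
# Footprint and compute density from the dimensions quoted in the text.
CHIP_WIDTH_MM = 17
CHIP_HEIGHT_MM = 23
CHIP_TFLOPS = 40

# Assumption: the footprint is a plain rectangle of the stated dimensions.
area_mm2 = CHIP_WIDTH_MM * CHIP_HEIGHT_MM
tflops_per_mm2 = CHIP_TFLOPS / area_mm2

print(f"Footprint: {area_mm2} mm^2")                       # Footprint: 391 mm^2
print(f"Compute density: {tflops_per_mm2:.3f} TFLOPS/mm^2")  # 0.102 TFLOPS/mm^2
```

In other words, each square millimeter of the footprint accounts for roughly a tenth of a TFLOPS.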

To maximize power efficiency, the team determined the dimensions and number of matrix calculators through simulations and focused on optimizing the design, for example by distributing operation time across modules in parallel. The chip is expected to be mounted on boards in data center servers that run AI-related services. According to ETRI, embedding a single chip in an autonomous vehicle will enable simultaneous safety-related functions, such as recognizing pedestrians, lanes, and traffic lights in images received from the car’s cameras.

02 The Aldebaran chip of ETRI with high computation performance but small size of 17 mm x 23 mm

03 Aldebaran chip capable of 40 TFLOPS of computations at 15 W power

ETRI’s AI chip can be used for performance power, remote healthcare, finance and banking, and face/behavior recognition, as well as AI speakers and autonomous vehicles. It is also expected to be of great help in localizing product components with deep learning and in creating added value.

ETRI aims to develop a chip capable of simultaneous learning and inference. Current AI uses simple inference to answer a plain question such as “What will today’s weather be like?” ETRI plans to advance that inference capability to the point where the chip can relate the issue of climate change to the current weather and make relevant arguments.

Teraflops (TFLOPS)

A measure of computations per second. 1 TFLOPS is equivalent to 1 trillion floating-point computations per second.